Lean-auto is an interface between Lean and automated theorem provers. Up to now, lean-auto is maintained and developed primarily by Yicheng Qian (GitHub: PratherConid). It is currently in active development, and pull requests/issues are welcome. For more information, feel free to reach out to Yicheng Qian on Lean Zulip.

Lean-auto is based on a monomorphization procedure from dependent type theory to higher-order logic and a deep embedding of higher-order logic into dependent type theory. It is capable of handling dependently-typed and/or universe-polymorphic input terms. Currently, proof reconstruction can be handled by Duper, a higher-order superposition prover written in Lean. To enable Duper, please import the duper repo in your project, and set the following options:

import Auto.Tactic

import Duper.Tactic

open Lean Auto in

def Auto.duperRaw (lemmas : Array Lemma) (inhs : Array Lemma) : MetaM Expr := do

let lemmas : Array (Expr × Expr × Array Name × Bool) ← lemmas.mapM

(fun ⟨⟨proof, ty, _⟩, _⟩ => do return (ty, ← Meta.mkAppM ``eq_true #[proof], #[], true))

Duper.runDuper lemmas.toList [] 0

attribute [rebind Auto.Native.solverFunc] Auto.duperRaw

set_option auto.native true

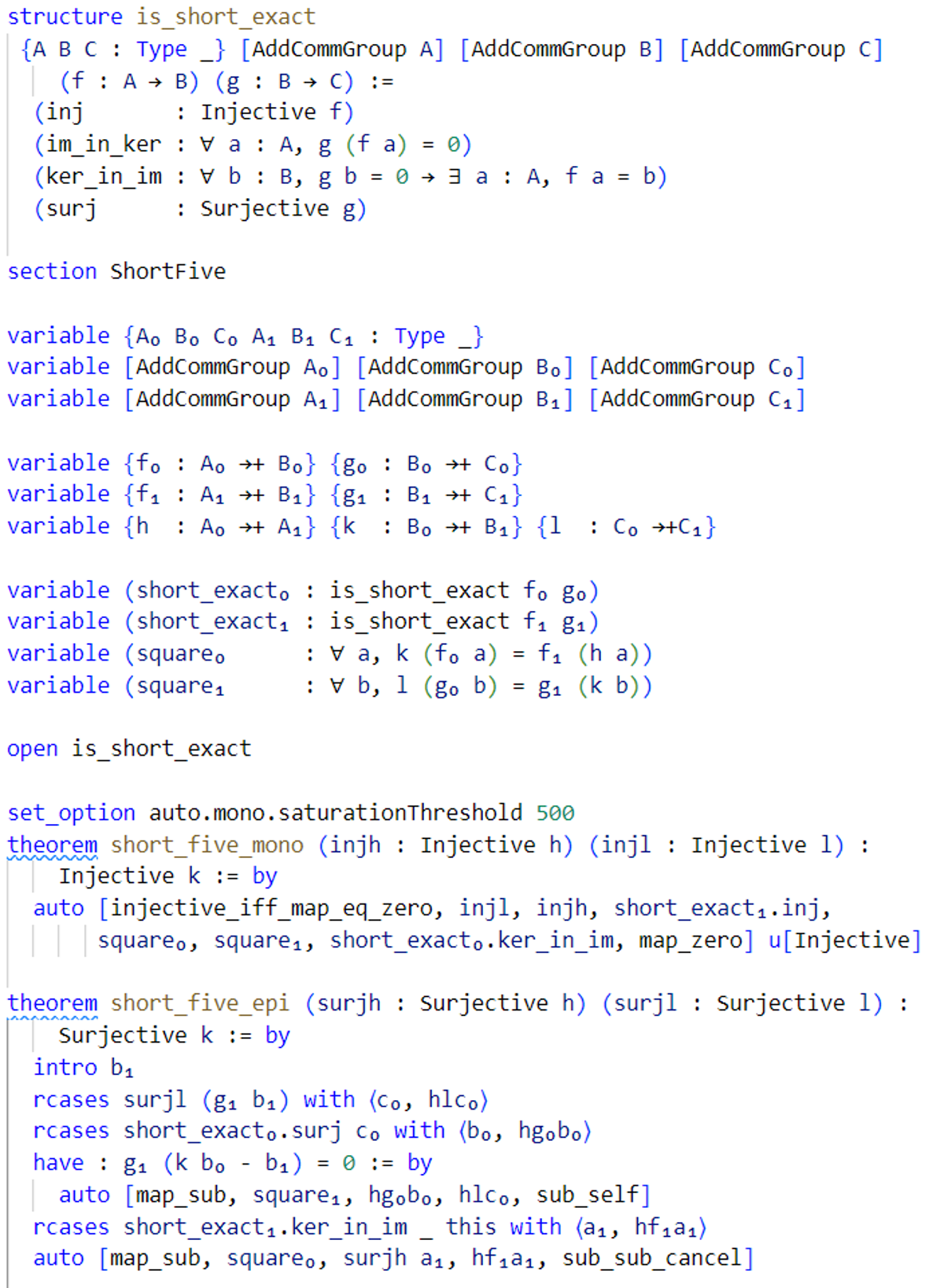

Although Lean-auto is still under development, it's already able to solve nontrivial problems. For example the first part of the "snake lemma" in category theory can be solved by a direct invocation to auto (and the second part can also be partly automated):

Type "auto 👍" to test whether auto is set up.

Usage

auto [<term>,*] u[<ident>,*] d[<ident>,*]u[<ident>,*]: Unfold identifiersd[<ident>,*]: Add definitional equality related to identifiers

- The file

Test/Test_Regression.leanin this repo contains working examples of usages oflean-auto. Note that thenativemode inTest/Test_Regressionuses a dummy native solver, but this is only for testing. In real use cases, you should replace the dummy solver with a native theorem prover such asduper. See below for detailed instructions. - Currently, auto supports

- SMT solver invocation:

set_option auto.smt true, but without proof reconstruction. Make sure that SMT solvers are installed, and thatauto.smt.solver.nameis correctly set. If you want to try - TPTP solver invocation:

set_option auto.tptp true, but without proof reconstruction. Currently, we only support zipperposition. Make sure thatauto.tptp.solver.nameandauto.tptp.zeport.pathare correctly set. - Proof search by native prover. To enable proof search by native prover, use

set_option auto.native true, and useattribute [rebind Auto.Native.solverFunc] <solve_interface>to bindlean-autoto the interface of the solver, which should be a Lean constant of typeArray Lemma → Array Lemma → MetaM Expr.

- SMT solver invocation:

Installing Lean-auto

z3version >= 4.12.2. Lower versions may not be able to deal with smt-lib 2.6 string escape sequence.cvc5zipperpositionportfolio mode

Troubleshooting

Monomorphization failed because ...:

Try addingset_option auto.mono.ignoreNonQuasiHigherOrder truebefore you invokeautoDuper Saturatedwhen usinglean-auto + duper:

This can happen ifThe list of hints you provided to autois insufficientduperis unable to prove the translated problem, although the translated problem is provableautois unable to handle certain Lean features in the problem

To find out if the issue is caused by, try proving the goal manually using only the basic tactics (e.g. rw,apply,simp only, anddsimp only) and the hints you provided tolean-auto. Try not to usesimp,simp_allandgrindbecause they might automatically use theorems that are not within the hint list provided to them. If you're able to prove the goal manually, it means that the list of hints you provided is sufficient.

To find out if the issue is caused by, try using lean-auto + SMT solverandlean-auto + TPTP solverand see if they're able to solve the goal. Note that even if the goal is still not solved, it does not necessarily mean that the translated problem is unprovable.

Utilities

- Command

#getExprAndApply [ <term> | <ident> ]: Defined inExprExtra.lean. This command first elaborates the<term>into a leanExpr, then applies function<ident>toExpr. The constantidentmust be already declared and be of typeExpr → TermElabM Unit - Command

#genMonadState <term>, #genMonadContext <term>: Defined inMonadUtils.lean. Refer to the comment at the beginning ofMonadUtils.lean. - Command

#fromMetaTactic [<ident>]: CallsTactic.liftMetaTacticon<ident>. The constant<ident>must be already declared and be of typeMVarId → MetaM (List MVarId) - Lexical Analyzer Generator:

Parser/LeanLex.lean. The frontend is not yet implemented. The backend can be found inNDFA.lean.

Translation Workflow (Tentative)

- Collecting assumptions from local context and user-provided facts

- We reduce

letbinders and unfold projections when we collect assumptions. So, in the following discussion, we'll assume that the expression contains noletbinders and noprojs. - We also

reduce user provided facts so that there are nothing like

- We reduce

: Collecting constructors and recursors for inductive types (effectively, not directly) - e.g collecting equational theorem for match constructs

- e.g collect constructors for inductively defined propositions

: Monomorphization - Monomorphize all (dependently typed/universe polymorphic) facts to higher-order universe-monomorphic facts

stands for "constant universe level" - Note that at this stage, all the facts we've obtained are still valid

expressions and has convenient CIC proofs from the assumptions.

- We want all types

occuring in the signature of constants and variables to be of sort Type (u + 1), i.e.,. This is necessary because we want to write a checker (instead of directly reconstructing proof in DTT) and the valuation function from less expressive logic to dependent type theory requires [the elements in the range of the valuation function] to be [of the same sort]. - To do this, we use

GLift. For example,Nat.addis transformed intoNat.addLiftstructure GLift.{u, v} (α : Sort u) : Sort (max u (v + 1)) where /-- Lift a value into `GLift α` -/ up :: /-- Extract a value from `GLift α` -/ down : α def Nat.addLift.{u} (x y : GLift.{1, u} Nat) := GLift.up (Nat.add (GLift.down x) (GLift.down y)) - We only transfer these "lifted" terms to the less expressive

, and is unaware of the universe levels wrapped inside GLift.up. - Lifted constantes should be introduced into the local context. Theorems corresponding to the original one but using only lifted constants and with uniform universe levels, should also be introduced into the local context. Later translations should only use theorems and constants with uniform universe levels.

- We want all types

: Instantiating function arguments is the reified

- There should also be a process similar to ULifting that "lifts" Bool into Prop, Nat to Int

Reification

Auto/Translation/LamReif.lean

Auto/Translation/LamFOL2SMT.lean

Checker

- The checker is based on a deep embedding of simply-typed lambda calculus into dependent type theory.

- The checker is slow on large input. For example, it takes

6sto typecheck the final example inBinderComplexity.lean. However, this is probably acceptable for mathlib usages, because e.gMathlib/Analysis/BoxIntegral/DivergenceTheorem.leanhas two theorems that take4sto compile (but a large portion of the4sare spent on typeclass inference)

Rules in Proof Tree

defeq <num> <name>: The<num>-th definitional equality associated with definition<name>hw <name>: Lemmas hard-wired into Lean-autolctxInh: Inhabitation fact from local contextlctxLem: Lemma from local contextrec <indName>.<ctorName>rw [0, 1]: Rewrite0using1(1must be an equality)tyCanInh: Inhabitation instance synthesized for canonicalized typeciInstDefEq: Definitional equality resulting from instance relations betweenConstInststermLikeDefEq: Definitional equality resulting from definitional equalities between term-like subexpressions❰<term>❱: User-provided lemma<term>queryNative::<func_name>: Proved by native prover